Local Host Cache in XenDesktop

Local Host Cache (LHC) returned to Citrix in XenApp and XenDesktop 7.12 with new improvements. LHC keeps apps and desktops available to users during a database failure, with the exception of pooled VDI desktops. Users can connect to all their applications and non-pooled VDI desktops (MCS or PVS) until the database is back. Citrix had also introduced a feature called connection leasing (CL), but LHC offers more than CL.

Local Host Cache is supported for server-hosted applications and desktops, and static (assigned) desktops; it is not supported for pooled VDI desktops (created by MCS or PVS).

Check out the default CL/LHC options as per versions below:

(*) Depends on the installation type.

LHC is available in XenDesktop 7.11 but does not work as expected there. XenDesktop 7.12 is the first version to officially support LHC, so treat 7.12 as the baseline when planning for it.
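To see which of the two features is active on a Site, you can read both flags from the Site object. This is a minimal sketch, assuming it runs on a Delivery Controller with the Citrix Broker PowerShell snap-in installed and while the Site database is reachable.

```powershell
# Load the Citrix Broker snap-in (assumes the Citrix PowerShell SDK is installed
# and the snap-in is not already loaded in this session).
Add-PSSnapin Citrix.Broker.Admin.V2

# Show the current high availability settings for the Site.
Get-BrokerSite | Select-Object LocalHostCacheEnabled, ConnectionLeasingEnabled

# Enable Local Host Cache and disable connection leasing.
Set-BrokerSite -LocalHostCacheEnabled $true -ConnectionLeasingEnabled $false
```

Run the Get-BrokerSite line again afterwards to confirm the change took effect.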

The Local Host Cache (LHC) feature allows connection brokering operations in a XenApp or XenDesktop Site to continue when an outage occurs. An outage occurs when:

- The connection between a Delivery Controller and the Site database fails in an on-premises Citrix environment.

- The WAN link between the Site and the Citrix control plane fails in a Citrix Cloud environment.

Local Host Cache is the most comprehensive high availability feature in XenApp and XenDesktop. It is a more powerful alternative to the connection leasing feature that was introduced in XenApp 7.6.

Although this Local Host Cache implementation shares the name of the Local Host Cache feature in XenApp 6.x and earlier XenApp releases, there are significant improvements. This implementation is more robust and immune to corruption. Maintenance requirements are minimized, such as eliminating the need for periodic dsmaint commands. This Local Host Cache is an entirely different implementation technically.

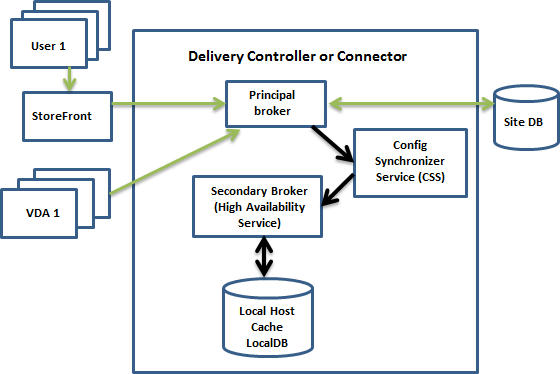

During normal operations:

- The principal broker (Citrix Broker Service) on a Controller accepts connection requests from StoreFront, and communicates with the Site database to connect users with VDAs that are registered with the Controller.

- A check is made every two minutes to determine whether changes have been made to the principal broker’s configuration. Those changes could have been initiated by PowerShell/Studio actions (such as changing a Delivery Group property) or system actions (such as machine assignments).

- If a change has been made since the last check, the principal broker uses the Citrix Config Synchronizer Service (CSS) to synchronize (copy) information to a secondary broker (Citrix High Availability Service) on the Controller. All broker configuration data is copied, not just items that have changed since the previous check. The secondary broker imports the data into a Microsoft SQL Server Express LocalDB database on the Controller. The CSS ensures that the information in the secondary broker’s LocalDB database matches the information in the Site database. The LocalDB database is re-created each time synchronization occurs.

- If no changes have occurred since the last check, no data is copied.

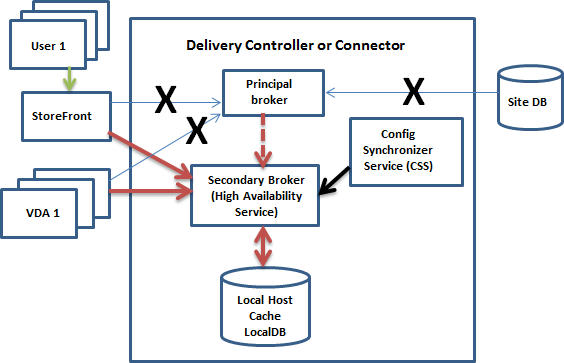

The following graphic illustrates the changes in communications paths if the principal broker loses contact with the Site database (an outage begins):

When an outage begins:

- The principal broker can no longer communicate with the Site database, and stops listening for StoreFront and VDA information (marked X in the graphic). The principal broker then instructs the secondary broker (High Availability Service) to start listening for and processing connection requests (marked with a red dashed line in the graphic).

- When the outage begins, the secondary broker has no current VDA registration data, but as soon as a VDA communicates with it, a re-registration process is triggered. During that process, the secondary broker also gets current session information about that VDA.

- While the secondary broker is handling connections, the principal broker continues to monitor the connection to the Site database. When the connection is restored, the principal broker instructs the secondary broker to stop listening for connection information, and the principal broker resumes brokering operations. The next time a VDA communicates with the principal broker, a re-registration process is triggered. The secondary broker removes any remaining VDA registrations from the previous outage, and resumes updating the LocalDB database with configuration changes received from the CSS.

In the unlikely event that an outage begins during a synchronization, the current import is discarded and the last known configuration is used.

The event log provides information about synchronizations and outages. See the “Monitor” section of the Citrix Local Host Cache documentation (linked later in this article) for details.
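As a rough way to pull those events, the sketch below filters the Windows event log by event ID. The IDs are the ones I recall from the Citrix Local Host Cache documentation, and the assumption that the services write to the Application log should be verified for your release.

```powershell
# Event IDs (assumption: taken from the Citrix LHC documentation, verify for your version):
#   503, 504, 505    - Config Synchronizer Service (change received, copied, import failed)
#   3502, 3503, 3504 - High Availability Service (outage started, outage ended, election result)
$lhcEventIds = 503, 504, 505, 3502, 3503, 3504

# Filtering on ID alone can match unrelated applications, so ProviderName is included
# in the output to let you discard events that are not from the Citrix services.
Get-WinEvent -FilterHashtable @{ LogName = 'Application'; Id = $lhcEventIds } `
        -MaxEvents 50 -ErrorAction SilentlyContinue |
    Select-Object TimeCreated, Id, ProviderName, Message
```

If nothing is returned, either no matching events exist yet or the services log elsewhere in your environment.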

You can also intentionally trigger an outage.
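Forcing an outage is done through a registry value on each Delivery Controller. The sketch below uses the key path and value name as given in the Citrix Local Host Cache documentation; treat both as something to confirm for your release. Remember that Studio and the PowerShell cmdlets are unavailable while the forced outage is active.

```powershell
# Run on each Delivery Controller, in an elevated session.
$lhcKey = 'HKLM:\SOFTWARE\Citrix\DesktopServer\LHC'

# Force outage mode: the secondary broker takes over brokering.
# -Force overwrites the value if it already exists.
New-ItemProperty -Path $lhcKey -Name OutageModeForced -PropertyType DWORD -Value 1 -Force

# ... test application and desktop launches through StoreFront here ...

# Return to normal operations.
Set-ItemProperty -Path $lhcKey -Name OutageModeForced -Value 0
```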

Sites with multiple Controllers

Among its other tasks, the CSS routinely provides the secondary broker with information about all Controllers in the zone. (If your deployment does not contain multiple zones, this action affects all Controllers in the Site.) Having that information, each secondary broker knows about all peer secondary brokers.

The secondary brokers communicate with each other on a separate channel. They use an alphabetical list of FQDN names of the machines they’re running on to determine (elect) which secondary broker will be in charge of brokering operations in the zone if an outage occurs. During the outage, all VDAs re-register with the elected secondary broker. The non-elected secondary brokers in the zone will actively reject incoming connection and VDA registration requests.

If an elected secondary broker fails during an outage, another secondary broker is elected to take over, and VDAs will re-register with the newly-elected secondary broker.

The event log provides information about elections.
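The election rule can be illustrated with a few lines of PowerShell. This is only a model of the alphabetical ordering, assuming the first name in the sorted list wins; the Controller names are hypothetical and the exact comparison rules Citrix applies are not stated here.

```powershell
# Hypothetical Controller FQDNs in one zone (illustrative names only).
$controllers = 'ddc03.corp.example.com', 'ddc01.corp.example.com', 'ddc02.corp.example.com'

# Alphabetical order decides the election: the first name in the sorted list wins.
$elected = $controllers | Sort-Object | Select-Object -First 1
"Elected secondary broker: $elected"

# If the elected broker fails during the outage, the next name in the list takes over.
$remaining = $controllers | Where-Object { $_ -ne $elected }
$nextElected = $remaining | Sort-Object | Select-Object -First 1
"Next in line: $nextElected"
```

Because hardware is not part of the decision, a naming scheme like this one always puts the load on the same Controller first, which is worth keeping in mind when sizing.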

What is unavailable or changes during an outage

- You cannot use Studio or run PowerShell cmdlets.

- Hypervisor credentials cannot be obtained from the Host Service. All machines are in the unknown power state, and no power operations can be issued. However, VMs on the host that are powered-on can be used for connection requests.

- Machines with VDAs (power management enabled) in pooled Delivery Groups that are configured with “Shut down after use” are placed into maintenance mode.

- An assigned machine can be used only if the assignment occurred during normal operations. New assignments cannot be made during an outage.

- Automatic enrollment and configuration of Remote PC Access machines is not possible. However, machines that were enrolled and configured during normal operation are usable.

- Server-hosted applications and desktop users may use more sessions than their configured session limits, if the resources are in different zones.

- Monitoring data is not sent to Citrix Cloud during an outage. So, the Monitor functions (Director) do not show activity from an outage interval.

- Elections: When a zone loses contact with the SQL database, an election nominates a single Delivery Controller as master. All remaining Controllers go into idle mode. Simple alphabetical order determines the winner of the election (based on the list of FQDNs of the registered Delivery Controllers).

- VDI limits:

  - In a single-zone VDI deployment, up to 10,000 VDAs can be handled effectively during an outage.

  - In a multi-zone VDI deployment, up to 10,000 VDAs in each zone can be handled effectively during an outage, to a maximum of 40,000 VDAs in the site.
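To compare a Site against these limits, you can count registered VDAs per zone. This is a sketch under assumptions: it must run during normal operations (the cmdlets are unavailable in an outage), and it assumes the machine objects returned by Get-BrokerMachine expose a ZoneName property in your SDK version.

```powershell
# Load the Citrix Broker snap-in (assumes it is installed and not already loaded).
Add-PSSnapin Citrix.Broker.Admin.V2

# Count registered VDAs per zone. -MaxRecordCount is raised because the default
# limit of 250 records would truncate the result in larger Sites.
Get-BrokerMachine -RegistrationState Registered -MaxRecordCount 100000 |
    Group-Object -Property ZoneName |
    Select-Object Name, Count
```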

RAM size:

With LHC enabled, the LocalDB service can use up to 1.2 GB more RAM than usual during an outage. Account for this extra memory when sizing your Citrix Delivery Controllers, rather than waiting until an outage occurs.

CPU:

With LHC enabled, LocalDB uses more CPU than usual during an outage. Note that adding more sockets alone will not improve LHC performance, because LocalDB can use multiple cores but only a single socket. Add cores first, and then increase sockets.

Citrix recommends using multiple sockets with multiple cores. In Citrix testing, a 2x3 (2 sockets, 3 cores) configuration provided better performance than 4x1 and 6x1 configurations.
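To check how a Delivery Controller is currently laid out, the standard CIM classes report sockets, cores, and installed memory. A minimal sketch, run locally on the Controller:

```powershell
# Each Win32_Processor instance represents one socket as seen by Windows.
$sockets = @(Get-CimInstance -ClassName Win32_Processor)

# Report the socket count and cores per socket, for comparison with the
# 2x3 layout that performed well in Citrix testing.
"Sockets: $($sockets.Count)"
$sockets | Select-Object SocketDesignation, NumberOfCores, NumberOfLogicalProcessors

# Installed RAM in GB, to check headroom for the extra LocalDB memory use.
$ramGB = (Get-CimInstance -ClassName Win32_ComputerSystem).TotalPhysicalMemory / 1GB
"Installed RAM: {0:N1} GB" -f $ramGB
```

On a virtual Controller, the socket and core split is usually set in the hypervisor's VM settings, so this shows what the guest actually received.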

Storage:

During a database failure, LHC brokers apps and desktops for users who connect. While it does so, LHC storage grows by about 1 MB every 2-3 minutes at a logon rate of 10 logons per second.

In the normal scenario, that is, when the database is available, LHC is flushed and re-created every time there is a change in the database, and the storage is returned to the OS.
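As a back-of-the-envelope check, the growth rate quoted above can be extrapolated over the length of an outage. The outage duration below is an illustrative input, and linear growth at a steady 10 logons per second is an assumption.

```powershell
# Assumed input (illustrative): how long the outage lasts.
$outageMinutes = 60

# Roughly 1 MB every 2-3 minutes at 10 logons per second, extrapolated linearly.
$lowMB  = $outageMinutes / 3   # 1 MB every 3 minutes
$highMB = $outageMinutes / 2   # 1 MB every 2 minutes
"Estimated LocalDB growth over $outageMinutes minutes: $lowMB to $highMB MB"
```

For a one-hour outage this gives 20 to 30 MB, so disk space is rarely the limiting factor compared with RAM and CPU.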

Performance

During an outage, one broker handles all the connections, so in Sites (or zones) that load balance among multiple Controllers during normal operations, the elected broker might need to handle many more requests than normal during an outage. Therefore, CPU demands will be higher. Every broker in the Site (zone) must be able to handle the additional load imposed by LocalDB and all of the affected VDAs, because the broker elected during an outage could change.

Note: In the XenDesktop 7.12 release, up to 5,000 VDAs can be handled effectively during an outage. The 10,000-VDA figures given earlier apply to later releases such as 7.15.

During an outage, load management within the Site may be affected. Load evaluators (and especially, session count rules) may be exceeded.

During the time it takes all VDAs to re-register with a broker, that broker might not have complete information about current sessions. So, a user connection request during that interval could result in a new session being launched, even though reconnection to an existing session was possible. This interval (while the “new” broker acquires session information from all VDAs during re-registration) is unavoidable. Note that sessions that are connected when an outage starts are not impacted during the transition interval, but new sessions and session reconnections could be.

This interval occurs whenever VDAs must re-register with a different broker:

- An outage starts: When migrating from a principal broker to a secondary broker.

- Broker failure during an outage: When migrating from a secondary broker that failed to a newly-elected secondary broker.

- Recovery from an outage: When normal operations resume, and the principal broker resumes control.

LHC comparison: XenDesktop 7.15 vs XenApp 6.5

Although the Local Host Cache implementation in XenDesktop 7.15 (to be more precise, from version 7.12 onward) shares the name of the Local Host Cache feature in XenApp 6.x, there are significant differences that you should be aware of.

Advantages:

- LHC is supported for on-premises and Citrix Cloud installations

- The LHC implementation in XenDesktop 7.15 is more robust and immune to corruption

- Maintenance requirements are minimized, such as eliminating the need for periodic dsmaint commands

Disadvantages:

- Local Host Cache is supported for server-hosted applications and desktops, and static (assigned) desktops; it is not supported for pooled VDI desktops (created by MCS or PVS).

- No control over the secondary broker election: the election is based on an alphabetical list of the FQDNs of the registered Delivery Controllers. The election process is described in the “Sites with multiple Controllers” section above.

- Sizing for all Delivery Controllers must include additional compute resources.

Local Host Cache vs Connection leasing – highlights

- Local Host Cache was introduced to replace Connection Leasing, which will be removed in a future release.

- Local Host Cache supports more use cases than connection leasing.

- During outage mode, Local Host Cache requires more resources (CPU and memory) than connection leasing.

- During outage mode, only a single broker will handle VDA registrations and broker sessions.

- An election process decides which broker will be active during outage, but does not take into account broker resources.

- If any single broker in a zone cannot handle all logons during normal operation, it will not work well in outage mode.

- No site management is available during outage mode.

- A highly available SQL Server is still the recommended design.

- For intermittent database connectivity scenarios, it is still better to isolate the SQL Server and leave the site in outage mode until all underlying issues are fixed.

- There is a limit of 10,000 VDAs per zone.

- There is no 14-day limit.

- Pooled desktops are not supported in outage mode, in the default configuration.

More information on LHC, including monitoring and troubleshooting options, is available here: https://docs.citrix.com/en-us/xenapp-and-xendesktop/7-12/manage-deployment/local-host-cache.html

Troubleshooting local host cache issues in Citrix 7.x environments

With the release of XenApp 7.1, the change to the FMA architecture meant that the local host cache (LHC) was effectively done away with. Over the course of the many upgrades in the 7.x line (up to 7.13 at the time of writing), Citrix gradually reintegrated the “features” that came with the original IMA local host cache. With version 7.12, it was effectively restored to its former glory.

That said, troubleshooting synchronization issues is quite different from 6.x. Several new tools were introduced with 7.x. The following article gives a nice overview of the architecture:

https://docs.citrix.com/en-us/xenapp-and-xendesktop/7-12/manage-deployment/local-host-cache.html

The pertinent information on the troubleshooting tools:

- CDF tracing: Contains options for the ConfigSyncServer and BrokerLHC modules. Those options, along with other broker modules, will likely identify the problem.

- Report: You can generate and provide a report that details the failure point. This report feature affects synchronization speed, so Citrix recommends disabling it when not in use.

To enable and produce a CSS trace report, enter:

```powershell
New-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer\LHC -Name EnableCssTraceMode -PropertyType DWORD -Value 1
```

The HTML report is posted at C:\Windows\ServiceProfiles\NetworkService\AppData\Local\Temp\CitrixBrokerConfigSyncReport.html

After the report is generated, disable the reporting feature:

```powershell
Set-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer\LHC -Name EnableCssTraceMode -Value 0
```

- Export the broker configuration: Provides the exact configuration for debugging purposes.

```powershell
Export-BrokerConfiguration | Out-File <file-pathname>
```

For example: Export-BrokerConfiguration | Out-File C:\BrokerConfig.xml
