XenServer N/W defs

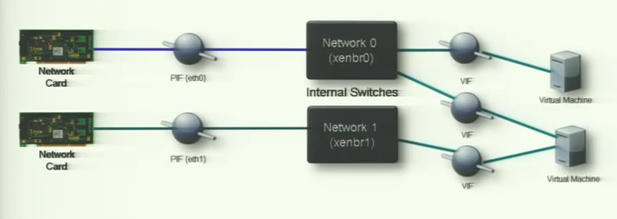

When we talk about setting up and configuring networks, we first need to understand how their components are named. XenServer knows three types of network objects: the physical network interface, the virtual network interface, and the network itself.

- PIF: This is the object name for a physical network interface. XenServer is based on Linux, so each physical network interface gets the usual Linux name ethX. XenServer creates a PIF object and assigns it to each ethX device. Each PIF gets a unique ID (uuid) that serves as its internal identifier.

- VIF: This is the virtual interface card of a virtual machine. Each VIF gets a _uuid_ as its internal unique identifier. A VIF is connected to a network, which can be either internal or external. You can configure QoS parameters for each VIF.

- Network: A network is actually a virtual Ethernet switch with bridging functionality. The switch gets a unique uuid and a name. The basic virtual switch in a standard XenServer installation acts like a simple physical switch, and virtual machines connect to it via their VIFs. A virtual switch can provide an uplink via a PIF to create an external network.

As the picture above shows, a PIF is created for every network card (eth0, eth1, and so on). A bridge is created for every PIF, and the VIFs are attached to that bridge. For example, traffic from a VM leaves through its VIF, passes through xenbrX and then through the network card, and reaches the external network (the public internet).
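The relationship between the three objects can be sketched in a few lines of Python. This is only an illustrative model, not XenServer's actual data structures: the class names, fields, and the `path_to_outside` helper are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional
from uuid import uuid4


def new_uuid() -> str:
    """Return a fresh random identifier, standing in for a XenServer uuid."""
    return str(uuid4())


@dataclass
class PIF:
    device: str  # Linux device name, e.g. "eth0"
    uuid: str = field(default_factory=new_uuid)


@dataclass
class VIF:
    vm_name: str  # hypothetical label for the owning VM
    uuid: str = field(default_factory=new_uuid)


@dataclass
class Network:
    bridge: str  # e.g. "xenbr0"
    pif: Optional[PIF] = None  # the uplink; None means an internal network
    vifs: List[VIF] = field(default_factory=list)
    uuid: str = field(default_factory=new_uuid)

    @property
    def is_external(self) -> bool:
        return self.pif is not None


def path_to_outside(net: Network, vif: VIF) -> List[str]:
    """List the hops a frame takes from a VM to the external network."""
    if vif not in net.vifs:
        raise ValueError("VIF is not attached to this network")
    if net.pif is None:
        return []  # internal network: there is no way out
    return [f"VIF({vif.vm_name})", net.bridge, net.pif.device]
```

For a VM attached to `xenbr0` with `eth0` as the uplink, `path_to_outside` returns the VIF, the bridge, and the NIC in that order, mirroring the path described above.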

When you move a managed server into a Resource Pool, these default networks are merged so that all physical NICs with the same device name are attached to the same network. A new PIF is created for every physical NIC present in a XenServer host (NIC0, NIC1, and so on). So when two XenServer hosts are added to a pool, NIC0 of xenserver1 and NIC0 of xenserver2 are treated as one network, and likewise NIC1 of xenserver1 and NIC1 of xenserver2. Typically, you only need to add a new network if you want to create an internal network, set up a new VLAN on an existing NIC, or create a NIC bond. You can configure up to 16 networks per managed server, or up to 8 bonded network interfaces.
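The merge rule amounts to grouping PIFs by device name. The following is a minimal sketch of that idea only; the function name and the `(host, device)` tuple layout are assumptions for illustration and do not reflect how XenServer stores pool data.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def merge_default_networks(pifs: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group (host, device) pairs so that equal device names share one network."""
    networks: Dict[str, List[str]] = defaultdict(list)
    for host, device in pifs:
        networks[device].append(host)
    return dict(networks)


pool = [
    ("xenserver1", "eth0"),
    ("xenserver1", "eth1"),
    ("xenserver2", "eth0"),
    ("xenserver2", "eth1"),
]
merged = merge_default_networks(pool)
```

Here `merged["eth0"]` lists both hosts, which is why the physical cabling of NIC0 should match on every host in the pool.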

Jumbo frames can be used to optimize performance of storage traffic. You can set the Maximum Transmission Unit (MTU) for a new server network in the New Network wizard or for an existing network in its Properties window, allowing the use of jumbo frames. The possible MTU value range is 1500 to 9216.
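As a small sanity check, the permitted range can be expressed in code. This sketch only encodes the 1500 to 9216 range stated above; the function names are hypothetical, and treating any value above 1500 as a jumbo frame is an assumption of the example.

```python
MTU_MIN = 1500  # standard Ethernet MTU, lower bound of the range
MTU_MAX = 9216  # upper bound of the range


def validate_mtu(mtu: int) -> int:
    """Return the MTU unchanged if it lies in the permitted range."""
    if not MTU_MIN <= mtu <= MTU_MAX:
        raise ValueError(f"MTU must be between {MTU_MIN} and {MTU_MAX}, got {mtu}")
    return mtu


def is_jumbo(mtu: int) -> bool:
    """Treat any valid MTU above the standard 1500 bytes as a jumbo frame."""
    return validate_mtu(mtu) > MTU_MIN
```

Keep in mind that the physical switches and storage targets along the path must also be configured for the same MTU.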

Network types

There are four different physical (server) network types to choose from when creating a new network within XenCenter.

Single-Server Private network

This is an internal network that has no association with a physical network interface. It provides connectivity only between the virtual machines on a given server **(only within that XenServer host, not between different XenServer hosts)**, with no connection to the outside world.

Cross-Server Private network

This is a pool-wide network that provides a private connection between the VMs within a pool, but which has no connection to the outside world. Cross-server private networks combine the isolation properties of a single-server private network with the ability to span a resource pool **(connectivity between multiple XenServer hosts in a pool)**. This enables use of VM agility features such as XenMotion live migration and Workload Balancing (WLB) for VMs with connections to cross-server private networks. VLANs provide similar functionality, but unlike VLANs, cross-server private networks provide isolation without requiring configuration of the physical switch fabric, through the use of the Generic Routing Encapsulation (GRE) IP tunneling protocol. To create a cross-server private network, the following conditions must be met:

- all of the servers in the pool must be using XenServer version 5.6 Feature Pack 1 or greater;

- all of the servers in the pool must be using Open vSwitch for networking;

- the pool must have a vSwitch Controller (Distributed vSwitch Controller) configured that handles the initialization and configuration tasks required for the vSwitch connection (this must be done outside of XenCenter).

External network

This type of network has an association with a physical network interface and provides a bridge between virtual machines and your external network, enabling VMs to connect to external resources through the server’s physical network interface card.

Bonded network

This type of network is formed by bonding two or more Physical NICs to create a single, high-performing channel that provides connectivity between VMs and your external network. Three bond modes are supported:

- Active-active – In this mode, traffic is balanced between the bonded NICs. If one NIC within the bond fails, all of the host’s network traffic automatically routes over the second NIC. This mode provides load balancing of virtual machine traffic across the physical NICs in the bond.

- Active-passive (active-backup) – Only one NIC in the bond is active; the inactive NIC becomes active if and only if the active NIC fails, providing a hot-standby capability.

- Link Aggregation Control Protocol (LACP) Bonding – This mode provides active-active bonding, where traffic is balanced between the bonded NICs. Unlike the active-active bond in a Linux bridge environment, LACP can load balance all traffic types. Two available options in this mode are:

- LACP with load balancing based on source MAC address – In this mode, the outgoing NIC is selected based on the MAC address of the VM from which the traffic originated. Use this option to balance traffic in an environment where you have several VMs on the same host. This option is not suitable if there are fewer VIFs than NICs, because load balancing is not optimal when the traffic cannot be split across NICs.

- LACP with load balancing based on IP and port of source and destination – In this mode, the source IP address, the source port number, the destination IP address, and the destination port number are used to route the traffic across NICs. This option is ideal for balancing traffic from VMs when the number of NICs exceeds the number of VIFs, for example when only one virtual machine is configured to use a bond of three NICs.
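The difference between the two LACP options comes down to which fields feed the hash that picks the outgoing NIC. The sketch below illustrates the idea with a CRC32 hash. This is an assumption chosen for simplicity and is not the hash that Open vSwitch or XenServer actually uses, and the function names are hypothetical.

```python
import zlib
from typing import List


def pick_nic_by_src_mac(src_mac: str, nics: List[str]) -> str:
    """Select the outgoing NIC from the VM's source MAC address only."""
    digest = zlib.crc32(src_mac.lower().encode())
    return nics[digest % len(nics)]


def pick_nic_by_flow(src_ip: str, src_port: int,
                     dst_ip: str, dst_port: int, nics: List[str]) -> str:
    """Select the outgoing NIC from the source/destination IP and port."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return nics[zlib.crc32(key) % len(nics)]


bond = ["eth0", "eth1", "eth2"]

# One VM, many connections: hashing on the source MAC always yields the same NIC.
mac_choices = {pick_nic_by_src_mac("aa:bb:cc:00:00:01", bond) for _ in range(50)}

# The same VM hashed per flow can land on different NICs, depending on the ports.
flow_choices = {
    pick_nic_by_flow("10.0.0.5", port, "10.0.0.9", 443, bond)
    for port in range(40000, 40050)
}
```

With a single VM, `mac_choices` always contains exactly one NIC, which is why the source-MAC option cannot use a three-NIC bond fully, whereas `flow_choices` may contain several.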

Notes

- You must configure vSwitch as the network stack to be able to view the LACP bonding options in XenCenter and to create a new LACP bond. Also, your switches must support the IEEE 802.3ad standard.

- Active-active and active-passive bond types are available for both the vSwitch and Linux bridge.

- You can bond either two, three, or four NICs when vSwitch is the network stack, whereas you can only bond two NICs when Linux bridge is the network stack.
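The rules in these notes can be collected into a small validation helper. This is a sketch of the constraints as stated above, not a XenServer API. The stack labels `"vswitch"` and `"bridge"`, the mode strings, and the function name are all hypothetical.

```python
from typing import List

# Maximum bond size per network stack, as given in the notes above.
MAX_BOND_NICS = {"vswitch": 4, "bridge": 2}

# Bond modes available on each stack; LACP needs the vSwitch stack.
SUPPORTED_MODES = {
    "vswitch": {"active-active", "active-passive", "lacp"},
    "bridge": {"active-active", "active-passive"},
}


def validate_bond(stack: str, mode: str, nics: List[str]) -> None:
    """Raise ValueError if the requested bond violates the stated rules."""
    if stack not in SUPPORTED_MODES:
        raise ValueError(f"unknown network stack: {stack}")
    if mode not in SUPPORTED_MODES[stack]:
        raise ValueError(f"mode {mode} is not available on the {stack} stack")
    if len(set(nics)) != len(nics):
        raise ValueError("a NIC may appear only once in a bond")
    if not 2 <= len(nics) <= MAX_BOND_NICS[stack]:
        raise ValueError(
            f"{stack} bonds need 2 to {MAX_BOND_NICS[stack]} NICs, got {len(nics)}"
        )
```

Note that the check says nothing about the physical switch, which must still support IEEE 802.3ad for an LACP bond to work.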

For more information about the support for NIC bonds in XenServer, see the XenServer Administrator’s Guide.

Posted in Citrix edocs

How XenServer Handles VM Traffic

• The physical NIC is in promiscuous mode, which means that it accepts all packets arriving on the wire.

• The packet is forwarded to the virtual switch xenbr0.

• The switch looks at the destination MAC address and finds the VIF to which that address is connected.

• Once the VIF is determined, XenServer passes the packet to the virtual machine.

For each physical NIC, an external network is created along with a network device named xenbrX. This device represents the virtual switch to the Linux kernel so that the kernel's bridging functionality can be used. Internal networks and VLAN networks create a xapiX device. You will not see the internal network device in the _ifconfig_ output, but you will see a VLAN network that is assigned to a physical NIC.

Virtual Switch

The virtual switch implemented in XenServer acts as a Layer 2 switch. This means it operates at the Data Link Layer of the OSI reference model, so communication between objects is based on MAC addresses rather than IP addresses. The virtual switch does not provide routing functionality.

XenServer is implemented so that a virtual machine connected to only one virtual switch can communicate only with interfaces that are also connected to that switch. Direct communication between virtual switches is not possible. If a virtual machine has more than one VIF, each connected to a different virtual switch, that virtual machine can route between the internal networks. If a virtual switch is connected to a PIF, the virtual machines can send packets to, and receive packets from, servers outside the XenServer host.

Because the virtual switch provides bridging and works at Layer 2, it needs no IP address to serve virtual machines. IP configuration is done inside the virtual machines. The virtual switch forwards frames based on MAC addresses. If the switch is connected to a PIF, it takes the MAC address of the physical interface but still has no IP address. The management interface (Primary) or a storage interface (Storage) does require an IP address. In that case XenServer assigns an IP address to the PIF/network. A network can have only one IP address.

The virtual switch learns MAC addresses from the frames that connected virtual machines send and receive. Through this process the switch learns which MAC address is reachable on which port, and afterwards sends frames directly to that port. If there is an uplink via a PIF, the switch sends a frame through the uplink when it cannot find a matching receiver connected directly to it. The PIF is connected to a physical switch, and the port it is connected to should be configured as a trunk port. This marks the port as a receiver for more than one MAC address, and the physical switch learns that these MAC addresses belong to this port. The PIF itself must be configured to accept frames addressed to MAC addresses other than its own. This is called promiscuous mode, and XenServer configures it automatically.
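The learning process can be illustrated with a toy learning switch. This is a generic Layer 2 sketch, not XenServer's bridge code: the class name and port labels are hypothetical, and the flood-on-unknown behaviour (which also covers sending through the uplink) is standard bridge behaviour assumed for the example.

```python
from typing import Dict, List


class LearningSwitch:
    """Toy Layer 2 switch that learns which MAC address sits behind which port."""

    def __init__(self, ports: List[str]):
        self.ports = list(ports)            # VIF ports plus, optionally, the PIF uplink
        self.mac_table: Dict[str, str] = {}  # MAC address -> port

    def receive(self, in_port: str, src_mac: str, dst_mac: str) -> List[str]:
        """Learn the sender's port and return the ports the frame is sent to."""
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: send out of every other port, uplink included.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the port the frame arrived on
        return [out_port]


switch = LearningSwitch(["vif1.0", "vif2.0", "eth0"])
first = switch.receive("vif1.0", "aa:aa", "bb:bb")   # bb:bb unknown, flooded
reply = switch.receive("vif2.0", "bb:bb", "aa:aa")   # aa:aa already learned
second = switch.receive("vif1.0", "aa:aa", "bb:bb")  # bb:bb now learned
```

After the reply has been seen, the second frame to `bb:bb` goes only to `vif2.0` instead of being sent everywhere.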

Complications arise when you live migrate a virtual machine to a different host. That host has a different virtual switch, and its PIF is connected to a different port on the same or a different physical switch. Before the virtual machine is fully migrated, a gratuitous ARP packet is sent so that each external device can learn the new location and refresh its MAC address cache. After that the migrated virtual machine can send to the outside world and, more importantly, receive packets.
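To make the gratuitous ARP concrete, the sketch below builds such a frame byte by byte. It follows the standard Ethernet and ARP layouts. Whether XenServer sends the request or the reply form is not stated here, so the request form used in the example is an assumption, and the function names are hypothetical.

```python
import socket
import struct


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into its 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", ""))


def build_gratuitous_arp(mac: str, ip: str) -> bytes:
    """Build a broadcast ARP request in which sender IP and target IP are equal."""
    src = mac_to_bytes(mac)
    addr = socket.inet_aton(ip)
    # Ethernet header: broadcast destination, VM MAC as source, EtherType ARP.
    eth_header = b"\xff" * 6 + src + struct.pack("!H", 0x0806)
    # ARP header: Ethernet (1), IPv4 (0x0800), address lengths 6 and 4, opcode 1.
    arp = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 1)
    # Sender MAC/IP, then an empty target MAC and the same IP as target.
    arp += src + addr + b"\x00" * 6 + addr
    return eth_header + arp


frame = build_gratuitous_arp("aa:bb:cc:00:00:01", "192.168.1.50")
```

Because the frame is broadcast and carries the VM's MAC as its source, every physical switch on the segment updates its MAC table to point at the new port.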

The virtual switch does not offer the advanced options that most physical switches do; it is a fairly simple bridging switch. There is only basic QoS functionality, which you can assign to each individual VIF to limit its bandwidth. If you need more functionality with a full multilayer stack, look at the Open vSwitch project.

VLAN Configuration

We touched on VLANs earlier. Creating a VLAN network is easy; it must be connected to a PIF, and the physical switch port that the physical NIC is attached to must be configured for the VLAN.

Creating such a network actually does two things. First, it creates an ethX.Y Linux network device, where ethX is the selected physical NIC and Y is the VLAN number you select. The ethX.Y device has the same MAC address as the ethX device. On top of that, a new network device named _xapiX_ is created to represent the virtual switch.

Virtual machines connected to a VLAN-configured switch are not aware that they are on a VLAN network. The virtual switch tags and untags packets automatically, fully transparently to the virtual machine.
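Tagging and untagging means inserting or removing the 4-byte IEEE 802.1Q header directly after the two MAC addresses of the Ethernet frame. The following sketch shows that operation on raw bytes. The function names are hypothetical, and this illustrates the frame format rather than XenServer's implementation.

```python
import struct
from typing import Tuple

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tagged frame


def add_vlan_tag(frame: bytes, vlan_id: int, priority: int = 0) -> bytes:
    """Insert an 802.1Q tag after the destination and source MAC addresses."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be between 1 and 4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority must be between 0 and 7")
    tci = (priority << 13) | vlan_id  # 3-bit priority, 1-bit DEI (0), 12-bit VLAN ID
    return frame[:12] + struct.pack("!HH", TPID_8021Q, tci) + frame[12:]


def strip_vlan_tag(frame: bytes) -> Tuple[bytes, int]:
    """Remove the 802.1Q tag and return the untagged frame plus its VLAN ID."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        raise ValueError("frame carries no 802.1Q tag")
    return frame[:12] + frame[16:], tci & 0x0FFF


# Untagged frame as the VM sends it: dst MAC, src MAC, EtherType IPv4, payload.
untagged = b"\x01" * 6 + b"\x02" * 6 + b"\x08\x00" + b"payload"
tagged = add_vlan_tag(untagged, vlan_id=100)
restored, vlan = strip_vlan_tag(tagged)
```

The VM only ever sees `untagged` and `restored`; the tag exists solely between the virtual switch and the physical switch port.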

The management (Primary) interface does not support VLAN-enabled networks. To use a VLAN network, we need the physical switch to tag the packets for us. If we want management traffic and a VLAN virtual machine network on the same interface, the physical switch must be configured with the management network as the native VLAN and the others as allowed VLANs. The switch then passes the configured VLANs through and tags each untagged packet with the native VLAN.

You can define a dedicated storage interface and assign it to a VLAN configured network. In a later post we will discuss the storage network in more detail.

Network Interface Cards in Unmanaged Mode

Every NIC installed in your server gets a PIF representation. On top of each PIF, XenServer creates a network, which as we know is a virtual switch. Virtual machines can be connected to these networks. This is the normal behaviour. However, it also means that if you use a NIC to connect to shared storage, for example, you cannot prevent the same NIC from being used for virtual machines. The same applies to the primary management interface.

To prevent a physical interface from showing up as a network, we need to put it into _unmanaged mode_. After that, the NIC has no PIF representation and no network assigned to it. Unfortunately, this cannot be done within XenCenter. First you need to run the CLI command xe pif-forget and then configure the network card ethX manually in the console.
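The CLI step could be scripted along the following lines. This is only a sketch that builds the `xe` command lines as argument lists without running them. The helper names and the idea of looking the PIF up by device name and host uuid are assumptions of the example, and the manual configuration of ethX afterwards is not covered.

```python
from typing import List


def pif_lookup_command(device: str, host_uuid: str) -> List[str]:
    """Build the xe call that prints the uuid of the PIF for a device on one host."""
    return [
        "xe", "pif-list",
        f"device={device}",
        f"host-uuid={host_uuid}",
        "params=uuid",
        "--minimal",
    ]


def pif_forget_command(pif_uuid: str) -> List[str]:
    """Build the xe call that removes the PIF object for the given uuid."""
    return ["xe", "pif-forget", f"uuid={pif_uuid}"]


# Placeholder identifiers for illustration only.
lookup = pif_lookup_command("eth2", "<host-uuid>")
forget = pif_forget_command("<pif-uuid>")
```

On a XenServer host these lists could be passed to `subprocess.run(cmd, check=True)`. Check which PIF the uuid belongs to first, because forgetting the PIF that carries the management interface would cut off access to the host.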

The basic idea is to separate virtual machine traffic from management traffic, especially the storage network. Putting a network card into unmanaged mode supports this separation and is, from my point of view, recommended if you use shared storage.

Here is a PDF from citrix which explains networking terms along with Distributed Virtual Switch Controller.

Here is a good article which explains Networking in Linux.
